Jiaojiao FanI am a Research Scientist at NVIDIA Deep Imagination Research in Santa Clara, California, where I work on world foundation models. Currently, I am working on improving Cosmos, NVIDIA's open-sourced groundbreaking world foundation model for Physical AI. I completed my PhD in Machine Learning at Georgia Institute of Technology in 2024, where I was advised by Prof. Yongxin Chen. During my PhD, I was fortunate to do internships at NVIDIA research, Snap Creative Vision Research, and Microsoft Research. During my NVIDIA internship, I developed customization capabilities for the Edify text-to-image generation model, which was successfully shipped as part of the Edify model family and deployed by enterprise clients for advertising applications. Edify customization enables extremely photorealistic image generation for human personalization tasks (e.g., from reference photo to customized output). |

|

NewsJun 2025

Cosmos Predict2 release! SOTA world model for autonomous driving and robot arm manipulation.

Jan 2025

Cosmos Predict1 release! First open source large scale world model!

Nov 2024

Edify image paper was released! Photorealistic text-to-image generation with pixel-space laplacian pyramid diffusion.

Jul 2024

Graduated from Georgia Tech and joined Nvidia as a research scientist working on NVIDIA GenAI, specifically Edify text-to-image model post-training.

May 2022

ICML22 Wasserstein gradient flow in pixel space is accepted!

|

Selected PublicationsSee Google Scholar for the full list of publications → |

|

Cosmos World Foundation Model Platform for Physical AINVIDIA White paper, 2025 arxiv / code / website / Jensen Huang keynote at CES2025 / NVIDIA’s open-source video world model platform for Physical AI represents a foundational breakthrough in world modeling. Cosmos enables developers to customize, and deploy world foundation models that can understand and predict physical interactions in video sequences |

|

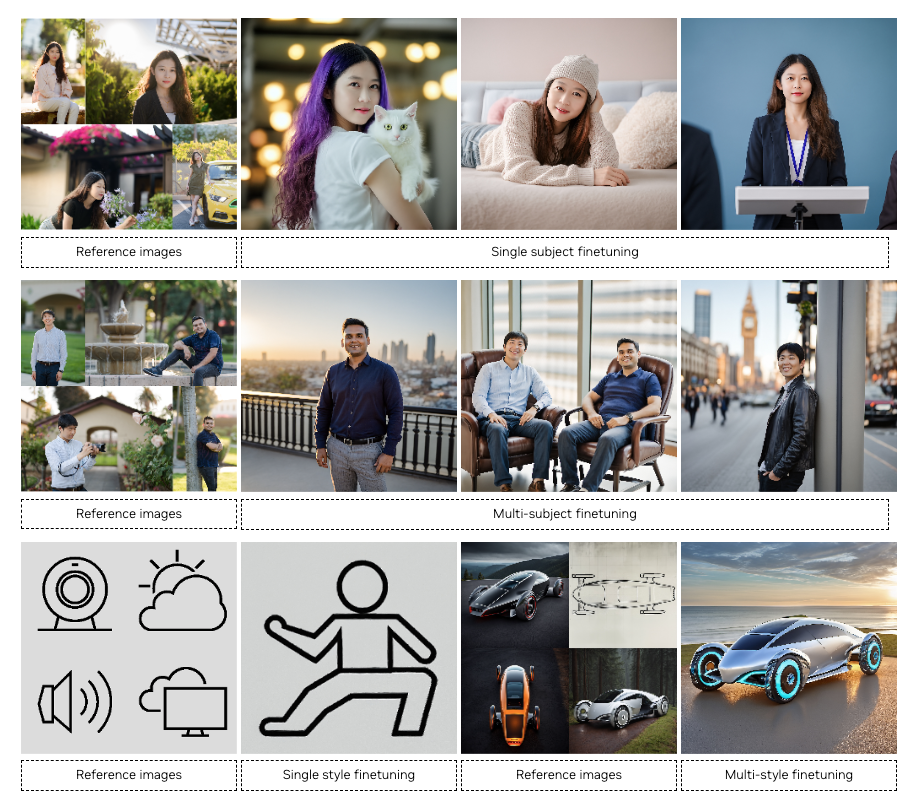

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion ModelsNVIDIA White paper, 2024 arxiv / website / Edify Image is a family of cascaded pixel-space diffusion text-to-image models capable of generating photorealistic images. Edify Image delivers exceptional human personalization results with remarkable identity consistency. The model also supports style transfer, 4K upsampling, ControlNets, and 360° HDR panorama generation |

|

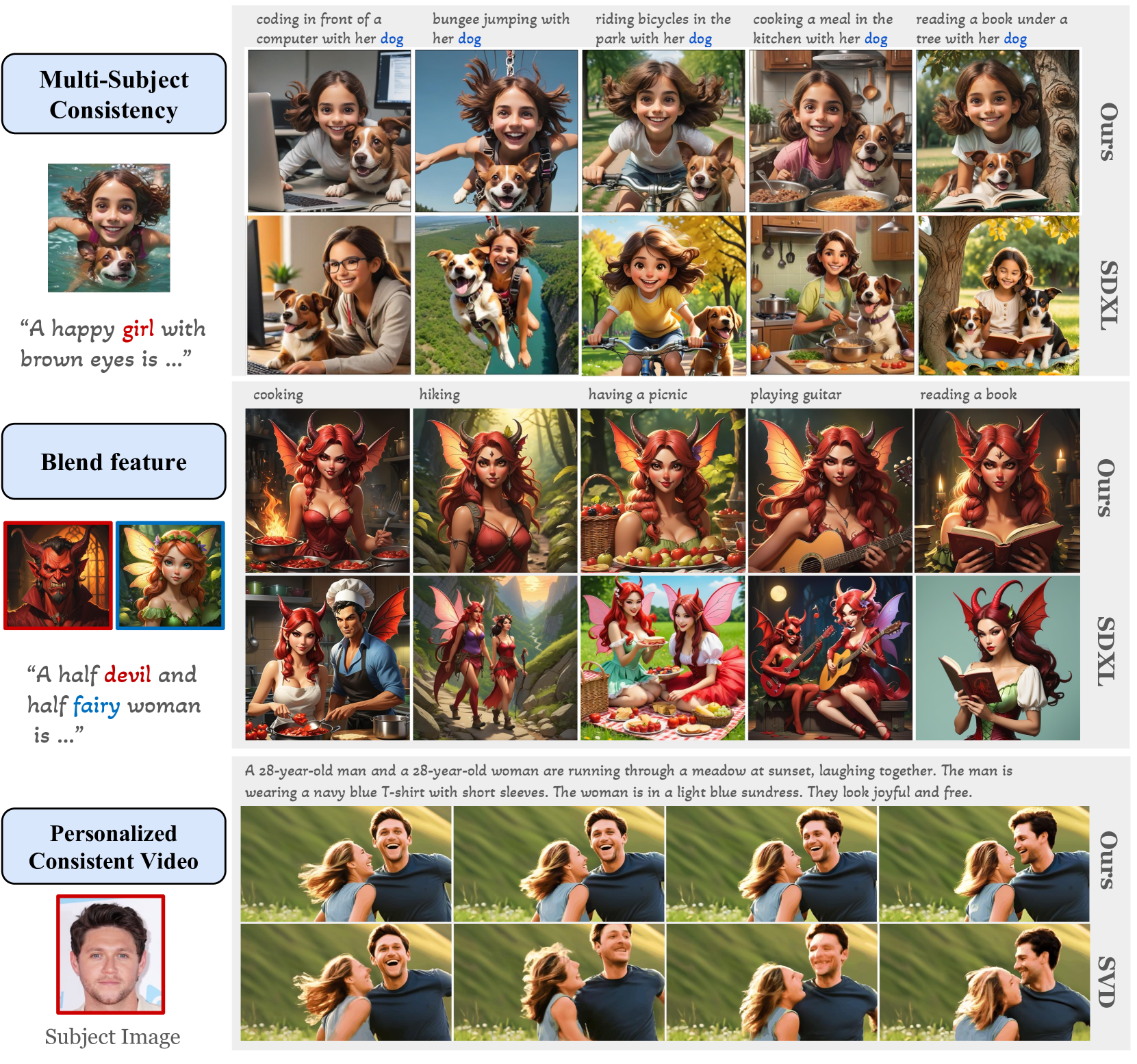

RefDrop: Controllable Consistency in Image or Video Generation via Reference Feature GuidanceJiaojiao Fan, Haotian Xue, Qinsheng Zhang, Yongxin Chen NeurIPS, 2024 arxiv / website / RefDrop is a training-free method for controlling consistency in image and video generation. By manipulating attention modules in diffusion models, RefDrop enables consistent character generation, multiple subject blending, diverse content creation, and enhanced temporal consistency in videos |

|

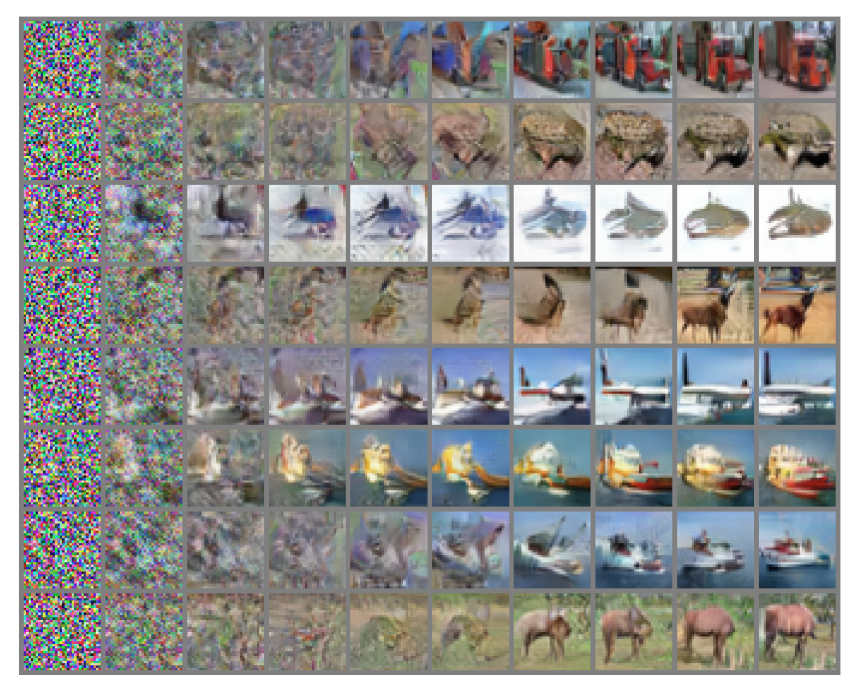

Variational Wasserstein Gradient FlowJiaojiao Fan, Qinsheng Zhang, Amirhossein Taghvaei, Yongxin Chen ICML, 2022 arxiv / code / A scalable approach to Wasserstein gradient flow using variational formulations |

|

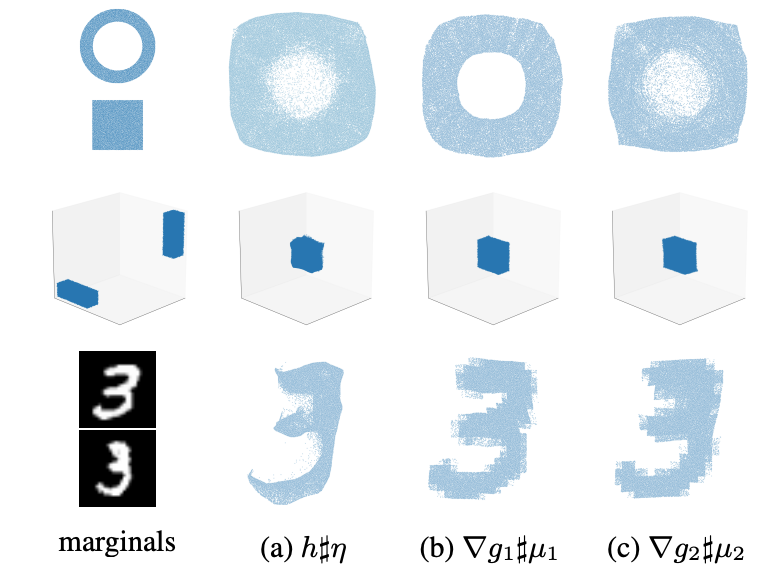

Scalable Computations of Wasserstein Barycenter via Input Convex Neural NetworksJiaojiao Fan, Amirhossein Taghvaei, Yongxin Chen ICML (long talk), 2020 arxiv / code / A novel scalable algorithm for computing Wasserstein Barycenters using Input Convex Neural Networks |

|

Design and source code from Leonid Keselman's website |